After this, I will then use poetry for package and dependency management ( not pip) by adding new packages with poetry add so that the required packages are tracked in the pyproject.toml and poetry.lock files. This is better than installing poetry inside your base environment because it’ll use whatever version of python is there and that might not be what you want. The side effect of this is that in the pyproject.toml file the python version will be set at the version you chose for that environment.

#ANACONDA CREATE ENVIRONMENT FROM REQUIREMENTS.TXT INSTALL#

Then I’ll activate that environment and pip install poetry. I create a new virtual environment first, where the python version in that environment is the minimum I’ll choose to support for that package.

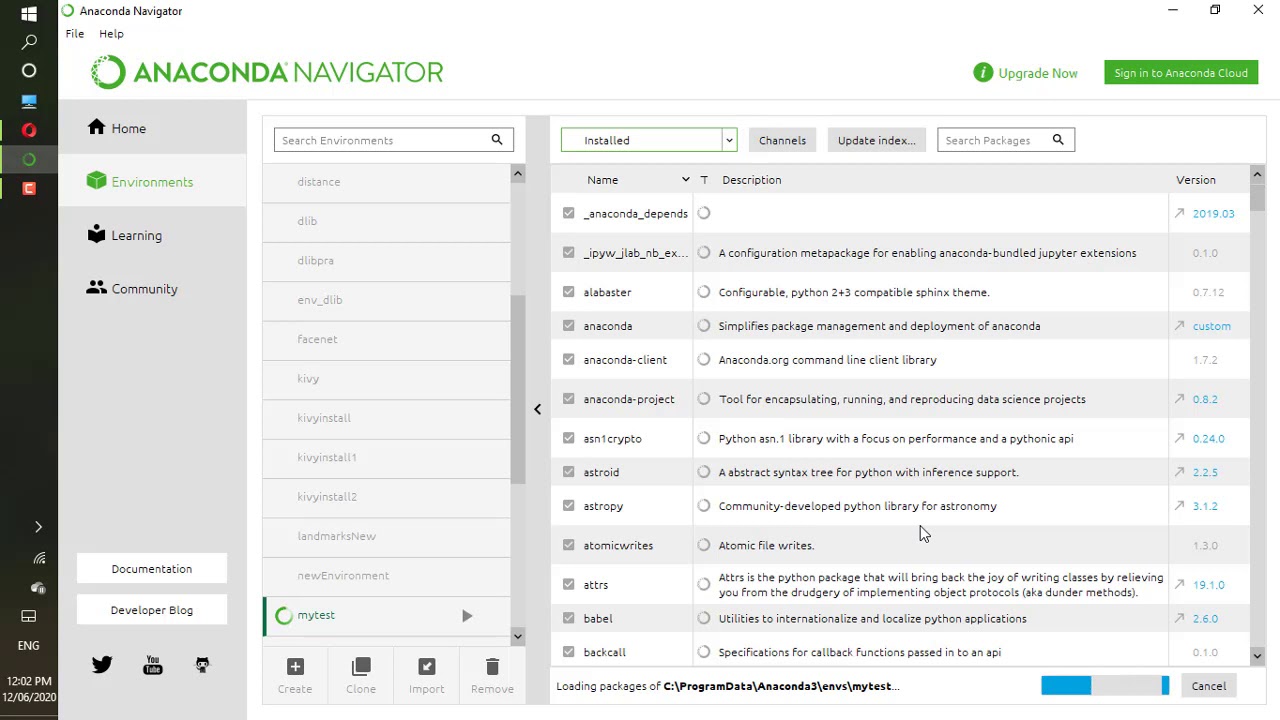

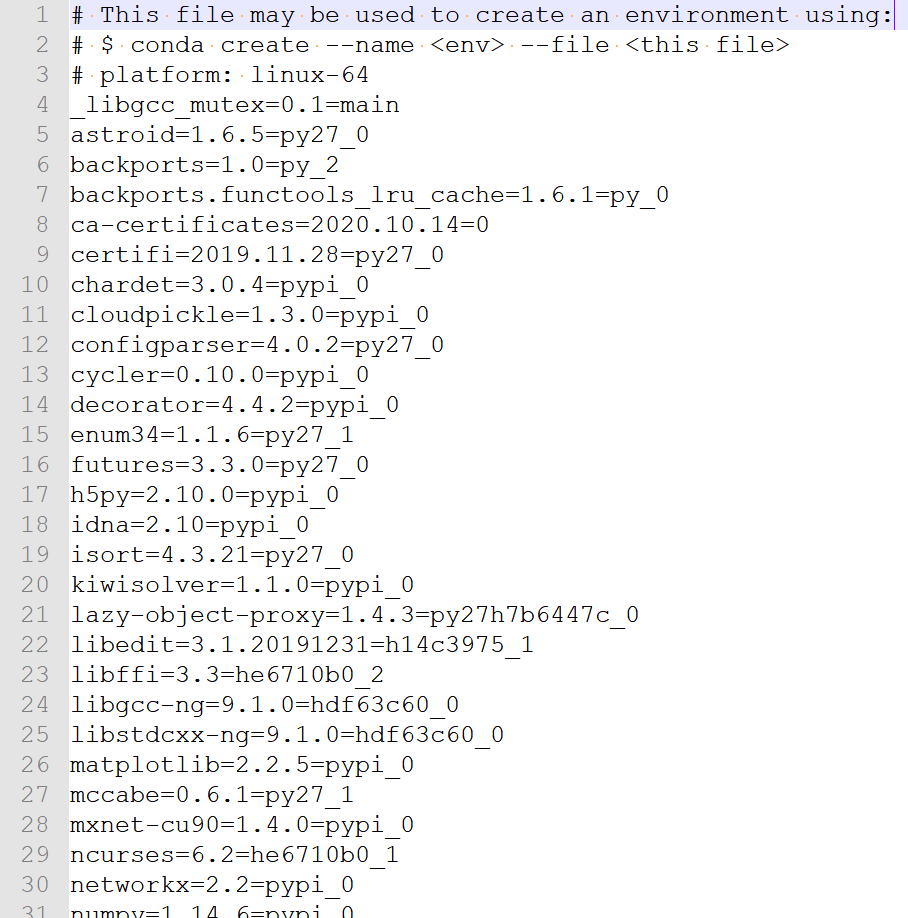

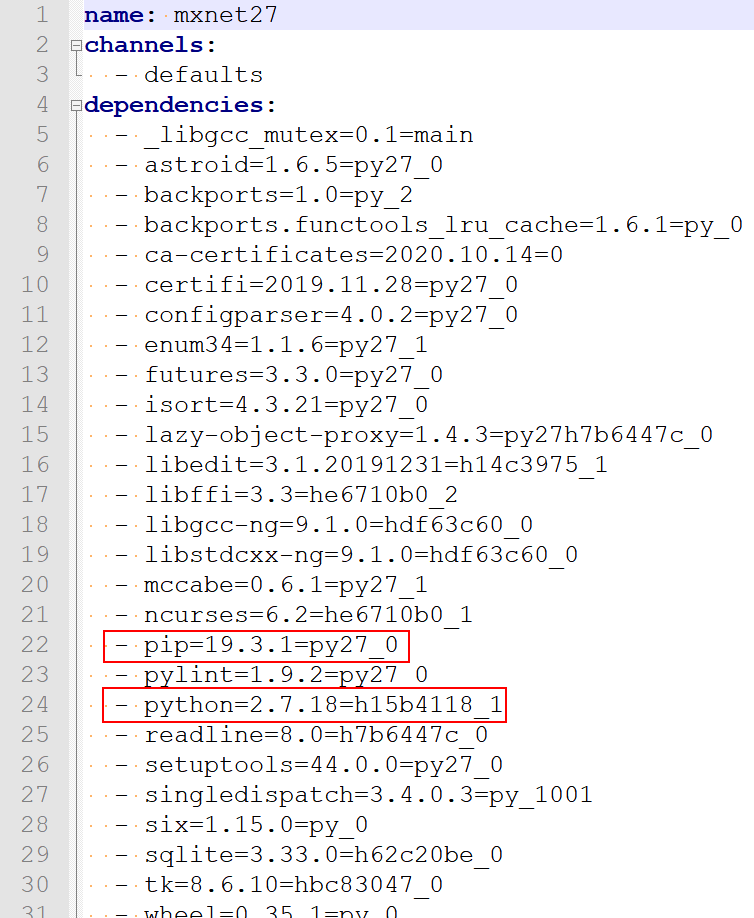

2 The side effect of imports working correctly is that you also don’t have to worry about some PATH nonsense with your test suite! It sets up pytest and gives an example test that also ✨just works✨. Every time I’ve tried to create a package manually I get lost for days in Stack Overflow ImportError hell. Here’s the main thing I like: it sets everything up so that relative and absolute imports ✨just work✨. More recently I have been creating packages using poetry. For most of my work I’m not creating a python package, I’m just following the previous process with conda and making sure my repositories include a requirements.txt that allows someone else to execute my code. anything that gets imported is in requirements.txt) and let any dependency versions resolve themselves when someone else does pip install -r requirements.txt. I know I could install everything I need and then just do a big pip freeze > requirements.txt, but I use the requirements.txt to indicate to any other user of this project what packages are primarily used (i.e. Then I’ll check what version was installed with pip freeze | grep pandas and copy that line to my requirements.txt. That is, if I need pandas, I’ll do a pip install pandas. If I’m doing a project that’s more analysis focused, or won’t result in a python package, I manually track the main packages installed. There is also a setting called Python › Terminal: Activate Env In Current Terminal you will want to turn on, otherwise sometimes the environment won’t activate if you have a terminal open in VSCode before the python extension loads. Select the environment you just created from this list. To do this in VSCode, regardless of the folder name, open the command palette ( ⇧⌘P) and find Python: Select Interpreter. Rather, I have it set up to automatically activate in VSCode and use the terminal there. However, I don’t typically do this to activate the environment. 
If you name the folder the same as the environment, you can do a 😎 cool trick 😎 where you activate the environment by the folder name by calling conda activate $. Using the above example, I’d make a folder called new_env. I create environments per project, so the environment is usually associated with a folder of the same name. I do this often so I created a bash function for it (put this in your ~/.bash_profile or ~/.zshrc). The command to create a new environment is conda create -n python=. I do not use conda’s environment.yml file except in rare circumstances. In short, I create environments with conda, then use pip install and a requirements.txt inside the environment. At the time (2014ish), there were some packages that were also easier to install via a conda install rather than a pip install, but I don’t think that’s been true since about 2017 1. This mostly a historical artifact: when I started programming as a data scientist, the easiest way to get up and running with python was through the Anaconda installer. I use conda (installed via miniconda) to manage virtual environments and python versions installed on my computer. Managing Python Versions & Virtual Environments: conda # All I know is that this works for me and I hope if you’re reading this it’ll help you find something that works for you.

I haven’t actually done a thorough evaluation of what’s available. The factors that I’ve determined are critically important for choosing these tools are as follows: I have a long list of criteria I’ve used to develop this workflow and have honed it over time since the start of my career as a data scientist. In this blog post I’ll walk you through the workflow I use for managing virtual environments and creating python packages.
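The conda section describes a small helper for the "one folder, one environment, same name" habit. Here is a rough Python sketch of what such a helper might do. It is not the author's bash function. The names `new_project`, `conda_create_cmd` and `env_name_from_folder` are hypothetical, and `new_env`/`3.8` are only example values. By default it creates the folder and returns the `conda create` command without running it. Running it (`run=True`) assumes conda is on your PATH.

```python
from __future__ import annotations

import subprocess
from pathlib import Path


def conda_create_cmd(env_name: str, python_version: str) -> list[str]:
    # Same shape as `conda create -n <env_name> python=<version>`;
    # -y answers conda's confirmation prompt automatically.
    return ["conda", "create", "-y", "-n", env_name, f"python={python_version}"]


def env_name_from_folder(folder: str | Path) -> str:
    # "Folder name == environment name" convention: the env name is the basename.
    return Path(folder).resolve().name


def new_project(root: str | Path, env_name: str, python_version: str,
                run: bool = False) -> list[str]:
    """Hypothetical helper: create <root>/<env_name> plus a matching conda env.

    With run=False (the default) it only creates the folder and returns the
    conda command; run=True executes it, which requires conda on your PATH.
    """
    folder = Path(root) / env_name
    folder.mkdir(parents=True, exist_ok=True)
    cmd = conda_create_cmd(env_name, python_version)
    if run:
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return cmd
```

For example, `new_project(".", "new_env", "3.8")` creates `./new_env` and returns `["conda", "create", "-y", "-n", "new_env", "python=3.8"]`. Deriving the environment name from the folder in one place is what keeps the folder-name activation trick reliable.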
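The manual `pip freeze | grep pandas` step for keeping only the primary packages in `requirements.txt` can be scripted. This is a minimal sketch. It assumes you keep a hand-written list of the packages you import directly, and `pin_primary`/`current_freeze` are hypothetical names. It filters `pip freeze` output down to those lines, so transitive dependencies stay out of the file and resolve themselves on install. Names are compared using the PEP 503 normalization rule (case-insensitive, with `-`, `_` and `.` treated as equivalent).

```python
from __future__ import annotations

import re
import subprocess
import sys


def normalize(name: str) -> str:
    # PEP 503 name normalization: lowercase, runs of -, _ and . become "-".
    return re.sub(r"[-_.]+", "-", name).lower()


def pin_primary(freeze_output: str, primary: list[str]) -> list[str]:
    """Keep only the `pip freeze` lines for packages you import directly."""
    wanted = {normalize(p) for p in primary}
    pinned = []
    for line in freeze_output.splitlines():
        line = line.strip()
        if "==" not in line:
            # Skip blank lines, comments, editable (-e) and `@ file://` entries.
            continue
        name = line.split("==", 1)[0]
        if normalize(name) in wanted:
            pinned.append(line)
    return pinned


def current_freeze() -> str:
    # Equivalent to running `pip freeze` in the currently active environment.
    return subprocess.run(
        [sys.executable, "-m", "pip", "freeze"],
        capture_output=True, text=True, check=True,
    ).stdout
```

In an activated environment, `print("\n".join(pin_primary(current_freeze(), ["pandas"])))` prints the pinned pandas line. Unlike `grep pandas`, the exact-name match will not also pick up packages such as `geopandas` or `pandas-stubs`.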
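The `pyproject.toml` point can be made more concrete. With poetry 1.x, the Python requirement is typically written as a caret constraint such as `python = "^3.8"` under `[tool.poetry.dependencies]`. Newer poetry releases may write a `requires-python` entry instead, so check your generated file. Under poetry's documented caret rule, `^3.8` means `>=3.8,<4.0`, so the environment's version becomes the floor you support. The sketch below implements that caret rule for plain numeric versions only. It is an illustration, not poetry's own code, and `caret_bounds`/`satisfies` are hypothetical names.

```python
from __future__ import annotations


def _parse(version: str) -> tuple[int, ...]:
    # Plain numeric versions only, e.g. "3.8" or "3.12.1" (no "rc1" suffixes).
    return tuple(int(part) for part in version.split("."))


def _pad(parts: tuple[int, ...], width: int = 3) -> tuple[int, ...]:
    return parts + (0,) * (width - len(parts))


def caret_bounds(spec: str) -> tuple[tuple[int, ...], tuple[int, ...]]:
    """Return (inclusive lower, exclusive upper) bounds for a spec like "^3.8"."""
    parts = _parse(spec.lstrip("^"))
    # Bump the first non-zero component; if all components are zero, bump the last.
    idx = next((i for i, p in enumerate(parts) if p != 0), len(parts) - 1)
    upper = parts[:idx] + (parts[idx] + 1,)
    return _pad(parts), _pad(upper)


def satisfies(version: str, spec: str) -> bool:
    lower, upper = caret_bounds(spec)
    return lower <= _pad(_parse(version)) < upper
```

For example, `satisfies("3.12.1", "^3.8")` is `True` and `satisfies("3.7.9", "^3.8")` is `False`. This is why creating the environment with your minimum supported Python matters: that version becomes the lower bound recorded in the project.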
